Introduction

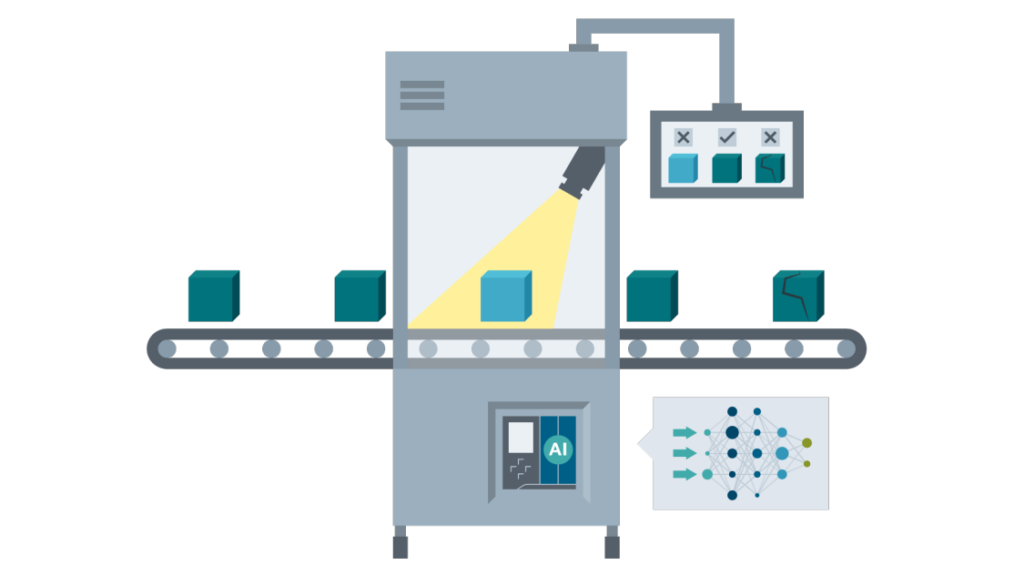

Visual inspection has always been a cornerstone of quality control in industrial environments.

It is used to detect defects and anomalies in products and parts quickly and precisely, ensuring that quality standards are maintained and that problems are caught before they propagate down the production line.

Machine learning and artificial intelligence now make it possible to automate this inspection.

This article will explain how a machine-learning algorithm can be used for automated inspection with little to no human interaction.

Anomaly Detection Model

For anomaly detection, we use a model trained as a one-class learning task.

This means that only nominal (defect-free) images are used for training, which reduces the data-collection effort compared with supervised approaches that also need labeled examples of defects.
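Because only nominal images are seen during training, the decision threshold cannot be learned from labeled defects. The sketch below illustrates one simple option, assuming anomaly scores have already been computed for a set of held-out nominal images: take a high quantile of those scores, so that roughly the chosen fraction of good parts scores at or below it. `fit_threshold` and `is_anomalous` are hypothetical helpers for illustration; this is not necessarily how anomalib chooses its threshold.

```python
import math


def fit_threshold(nominal_scores, quantile=0.99):
    """Hypothetical helper: pick a threshold using only scores of nominal images.

    Uses the nearest-rank quantile, i.e. the smallest score such that at least
    `quantile` of the nominal scores are less than or equal to it.
    """
    if not nominal_scores:
        raise ValueError("need at least one nominal score")
    if not 0.0 < quantile <= 1.0:
        raise ValueError("quantile must be in (0, 1]")
    ordered = sorted(nominal_scores)
    rank = max(1, math.ceil(quantile * len(ordered)))
    return ordered[rank - 1]


def is_anomalous(score, threshold):
    """Hypothetical helper: flag an image whose score exceeds the threshold."""
    return score > threshold


# Scores would come from the trained model on defect-free validation images.
threshold = fit_threshold([0.3, 0.1, 0.4, 0.2], quantile=1.0)
print(threshold, is_anomalous(0.55, threshold))
```

Raising `quantile` lowers the false-alarm rate on good parts at the cost of missing more subtle defects.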

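The model consists of a teacher network and a student network that both extract features at several scales, forming a pyramid-like architecture that helps to identify anomalies of different sizes.

The student is trained to reproduce the teacher's features on nominal images only, so on anomalous regions its features diverge from the teacher's. The difference between the student and teacher features is therefore used as a pixel-level anomaly score, allowing for precise localization of the anomaly within the image.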
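The pixel-level scoring can be sketched in plain Python. This is a minimal illustration that assumes the model follows the Student-Teacher Feature Pyramid Matching (STFPM) scheme, which the description matches but the article does not name: at each pyramid level, features are L2-normalized along the channel axis and compared per location, and the per-level maps are upsampled to the output size and multiplied. Feature maps are nested lists indexed `[channel][row][col]`, and nearest-neighbour upsampling stands in for the bilinear interpolation a real implementation would typically use.

```python
import math

# Feature maps are nested lists indexed as fmap[channel][row][col].


def l2_normalize_channels(fmap):
    """Scale each spatial location's feature vector to unit L2 norm."""
    channels, height, width = len(fmap), len(fmap[0]), len(fmap[0][0])
    out = [[[0.0] * width for _ in range(height)] for _ in range(channels)]
    for y in range(height):
        for x in range(width):
            norm = math.sqrt(sum(fmap[c][y][x] ** 2 for c in range(channels))) or 1.0
            for c in range(channels):
                out[c][y][x] = fmap[c][y][x] / norm
    return out


def layer_distance(teacher, student):
    """Per-location 0.5 * ||t - s||^2 between normalized teacher and student features."""
    t, s = l2_normalize_channels(teacher), l2_normalize_channels(student)
    channels, height, width = len(t), len(t[0]), len(t[0][0])
    return [
        [0.5 * sum((t[c][y][x] - s[c][y][x]) ** 2 for c in range(channels)) for x in range(width)]
        for y in range(height)
    ]


def upsample_nearest(grid, out_h, out_w):
    """Nearest-neighbour resize of a [row][col] grid to out_h x out_w."""
    in_h, in_w = len(grid), len(grid[0])
    return [[grid[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def anomaly_map(teacher_pyramid, student_pyramid, out_h, out_w):
    """Multiply the upsampled per-level distance maps into one pixel-level map."""
    result = [[1.0] * out_w for _ in range(out_h)]
    for teacher, student in zip(teacher_pyramid, student_pyramid):
        level = upsample_nearest(layer_distance(teacher, student), out_h, out_w)
        for y in range(out_h):
            for x in range(out_w):
                result[y][x] *= level[y][x]
    return result
```

Multiplying the levels means a pixel only receives a high score if the student disagrees with the teacher at every scale, which suppresses spurious single-level responses.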

Anomalib

We use anomalib, an open-source deep-learning library for anomaly detection, for our experiments. It provides a collection of state-of-the-art anomaly detection algorithms and tools for benchmarking them on public and private datasets. It can also export trained models to OpenVINO for faster inference on Intel hardware.

Dataset

The MVTec Anomaly Detection (MVTec AD) dataset is a widely used benchmark for anomaly detection methods, particularly those aimed at industrial inspection.

It contains over 5000 high-resolution images split into 15 categories of objects and textures, with defect-free images for training and test sets containing various kinds of defects.

Only the training images are defect-free: the test set mixes defect-free images with defective ones, and pixel-level ground-truth masks are provided for the defects, which makes it straightforward to compare different methods consistently.
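The one-class setup is reflected directly in how MVTec AD is organized on disk. The sketch below collects the training and test splits for one category, assuming the dataset's standard layout (`<category>/train/good`, `<category>/test/good`, `<category>/test/<defect_type>`, with masks under `<category>/ground_truth/<defect_type>`). `mvtec_split` is a hypothetical helper; anomalib ships its own MVTec data module.

```python
from pathlib import Path


def mvtec_split(root, category):
    """Hypothetical helper: collect (path, label) pairs for one MVTec AD category.

    Label 0 = nominal, 1 = anomalous. Training uses only <category>/train/good;
    every other folder under <category>/test is treated as a defect type.
    """
    base = Path(root) / category
    # One-class training: only defect-free images are used.
    train = [(p, 0) for p in sorted((base / "train" / "good").glob("*.png"))]
    test = []
    for defect_dir in sorted((base / "test").iterdir()):
        if not defect_dir.is_dir():
            continue
        label = 0 if defect_dir.name == "good" else 1
        test.extend((p, label) for p in sorted(defect_dir.glob("*.png")))
    return train, test
```

The pixel-level masks in `ground_truth` are only needed at evaluation time, for localization metrics.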

Results

We evaluated our deep-learning model on 7 of the 15 categories of the MVTec AD dataset.

We used a t3.xlarge AWS EC2 instance with 4 Intel Xeon Platinum 8175M vCPUs @ 2.50 GHz and 8 GB of RAM. The input image size was set to 244×244, and the model's hyperparameters were left at their default values.

The model was composed of two parts: a teacher (a ResNet18 pretrained on ImageNet) and a student (also a ResNet18, but randomly initialized).
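During training, the student learns to mimic the teacher on nominal images. A minimal sketch of the feature-matching objective, assuming the STFPM formulation (not named in the article): at each pyramid level, the loss is the average over spatial locations of 0.5 · ‖t − s‖² between L2-normalized teacher and student feature vectors, summed over levels. Only the student would be updated with this loss, the teacher staying frozen. `feature_matching_loss` is a hypothetical name, batching and gradients are omitted, and each level is flattened to a list of per-location feature vectors.

```python
import math


def normalize(vec):
    """Return the vector scaled to unit L2 norm (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def feature_matching_loss(teacher_pyramid, student_pyramid):
    """Hypothetical helper: sum over levels of the mean per-location 0.5*||t - s||^2.

    Each pyramid level is a list of feature vectors, one per spatial location.
    """
    total = 0.0
    for teacher_level, student_level in zip(teacher_pyramid, student_pyramid):
        level_loss = 0.0
        for t_vec, s_vec in zip(teacher_level, student_level):
            t, s = normalize(t_vec), normalize(s_vec)
            level_loss += 0.5 * sum((a - b) ** 2 for a, b in zip(t, s))
        total += level_loss / len(teacher_level)
    return total
```

Because both feature sets are normalized first, the student only has to match the direction of the teacher's features, not their magnitude.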

Below are the training results for each category, including the evaluation metrics and the time it took to train the model.

The model achieved very high scores, even reaching a perfect score in the leather category, which shows its potential for use in a real-world setting. The inference throughput was measured using a PyTorch implementation.
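The metric names are not listed in this text; on MVTec AD the usual headline metric is the area under the ROC curve (AUROC), where a perfect score of 1.0 means every defective image received a higher anomaly score than every defect-free one. Assuming that is the metric behind the results, the sketch below computes it through the equivalent Mann-Whitney formulation. anomalib computes its metrics itself; `auroc` is a hypothetical helper that only illustrates what the number means.

```python
def auroc(scores, labels):
    """Hypothetical helper: AUROC as the fraction of (anomalous, nominal) pairs ranked correctly.

    labels: 1 = anomalous, 0 = nominal. Ties count as half a correct ordering.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need both nominal and anomalous samples")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

The pairwise loop is quadratic in the number of images, which is fine for a single MVTec AD category but not for large test sets.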

Output prediction samples

Some results on test images for the bottle category are shown next.
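Predictions of this kind are usually obtained by reducing the pixel-level anomaly map to a single image-level score and thresholding the map into a defect mask. A minimal sketch, assuming the image score is the maximum pixel score and a fixed threshold is used; both are illustrative choices, and `image_score` and `binary_mask` are hypothetical helpers, not necessarily the settings behind the samples.

```python
def image_score(amap):
    """Hypothetical helper: image-level anomaly score as the maximum pixel score."""
    return max(max(row) for row in amap)


def binary_mask(amap, threshold):
    """Hypothetical helper: mark pixels scoring above the threshold as defective (1)."""
    return [[1 if v > threshold else 0 for v in row] for row in amap]


amap = [[0.1, 0.7], [0.2, 0.05]]
print(image_score(amap), binary_mask(amap, 0.5))
```

Taking the maximum makes a single strongly anomalous region enough to flag the whole part, which suits inspection tasks where any defect means rejection.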

OpenVINO (toolkit for faster inference on Intel hardware, tested here on CPU)

We also measured the inference throughput of the model trained on the bottle category with OpenVINO, using its built-in benchmark tool (benchmark_app). It reached roughly 30.2 fps, almost 4 times the throughput of the plain PyTorch implementation.
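OpenVINO's benchmark tool reports throughput directly; for a fair comparison with the PyTorch baseline, both backends should be timed the same way. A framework-agnostic sketch, assuming `infer` is a hypothetical callable that wraps one backend's forward pass on a single preprocessed image: it runs a few untimed warm-up calls, times the full pass over the inputs, and reports frames per second. `measure_fps` and `speedup` are hypothetical helpers.

```python
import time


def measure_fps(infer, inputs, warmup=5):
    """Hypothetical helper: frames per second of `infer` over a list of inputs."""
    # Untimed warm-up calls absorb one-off costs such as lazy initialization.
    for x in inputs[:warmup]:
        infer(x)
    start = time.perf_counter()
    for x in inputs:
        infer(x)
    elapsed = time.perf_counter() - start
    return len(inputs) / elapsed


def speedup(fps_new, fps_baseline):
    """Hypothetical helper: throughput ratio of a new backend over a baseline."""
    return fps_new / fps_baseline


# A stand-in "model" that takes about 1 ms per image.
fps = measure_fps(lambda x: time.sleep(0.001), list(range(20)))
print(round(fps, 1))
```

This measures single-image latency-bound throughput; batching or running several inference requests in parallel, as benchmark tools often do, can give higher numbers.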

Conclusion

Automated visual inspection can be a powerful tool for industrial processes, allowing more accurate and efficient detection of defects and anomalies.

With the help of modern technologies such as machine learning and artificial intelligence, it is now possible to detect problems in images with little to no human input.

Our tests on the MVTec AD dataset showed promising results, with the model achieving very high scores and even a perfect score in the leather category.

Inference speed was also measured, and running the model with OpenVINO increased throughput almost fourfold on CPU compared with plain PyTorch.

This is a promising step forward in the use of automated visual inspection in the industrial sector.