TL;DR

Cloning voice in Spanish without an expensive and long training process is possible. Here is an example of what it can generate:https://cdn.embedly.com/widgets/media.html?src=https%3A%2F%2Fwww.youtube.com%2Fembed%2F1Oi8bGTWZG4%3Ffeature%3Doembed&display_name=YouTube&url=https%3A%2F%2Fwww.youtube.com%2Fwatch%3Fv%3D1Oi8bGTWZG4&image=https%3A%2F%2Fi.ytimg.com%2Fvi%2F1Oi8bGTWZG4%2Fhqdefault.jpg&key=a19fcc184b9711e1b4764040d3dc5c07&type=text%2Fhtml&schema=youtubeAudio example from the voice cloning algorithm trained in Spanish

Introduction

First things first, what is TTS? TTS is Text To Speech, that means for example that from a text input we can generate speech or audio. Some of the elements we need to understand first are: Mel Spectrograms, STFT Spectrograms, Waveform.

Mel Spectrograms

A spectrogram is a visual way of representing a signal strength, or “loudness”, of a signal over time at various frequencies present in a particular waveform. Not only can one see whether there is more or less energy at, for example, 2 Hz vs 10 Hz, but one can also see how energy levels vary over time. In short is a way to represent audio as an image: here there’s an image of one to exemplify:

STFT Spectrograms (short-time Fourier transform spectrograms)

It is a Fourier-related transform used to determine the sinusoidal frequency and phase content of local sections of a signal as it changes over time. In practice, the procedure for computing STFTs is to divide a longer time signal into shorter segments of equal length and then compute the Fourier transform separately on each shorter segment. In short it is another type of spectrogram which is easier to transform back to audio.

Waveform

It is a curve showing the shape of a wave at a given time. For neural networks it is more difficult to process waveform than spectrograms for example. By using spectrograms instead, all the convolutional framework can be applied to audio processing. It is the well known audio waves. Here is an image of it:

Voice Cloning Algorithm

OK, so know we have a little bit more of an idea of some concepts involved into audio generation, let’s go ahead and have a little bit of an explanation of the method I used for the voice cloning. The original github repo link is: https://github.com/tugstugi/pytorch-dc-tts. The model it implements is ‘Efficiently trainable text-to-speech system based on deep convolutional networks with guided attention’ by Hideyuki Tachibana, Katsuya Uenoyama and Shunsuke Aihara.

Most TTS algorithms have two differentiated parts, the Syntethizer and the Vocoder. In the case of multi-speaker models there is also a speaker encoder.

Here is an image of the model architecture:

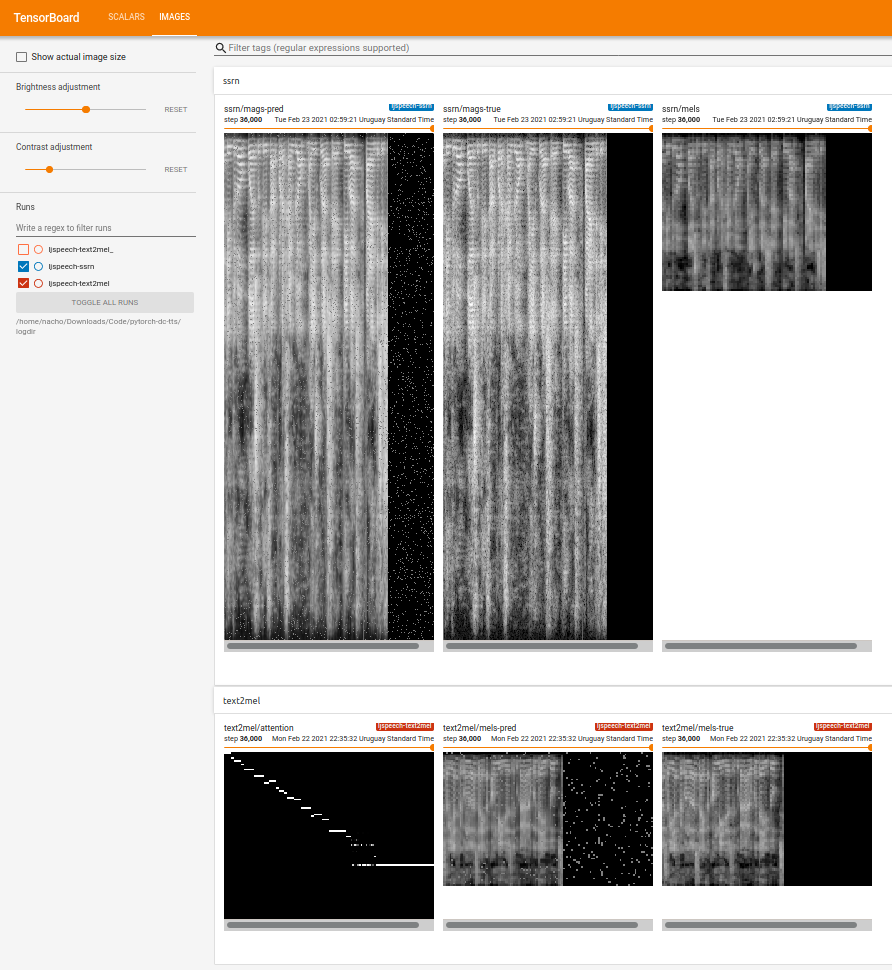

Text2Mel (Synthesizer)

The first part of the pipeline is the Text2Mel model. In it we have an encoder which uses attention and convolutional neural networks to transform the text into a low res mel spectrogram. This first model is called Text2Mel. This model uses dilated convolutions (a specific type of convolutional neural network) to generate the mel spectrogram. In this case Text2Mel takes the function of the Synthesizer.

Vocoder

Here the vocoder is formed by two components, the SSRN and RTISI-LA algorithms.

SSRN (Spectrogram Super-resolution Network)

This model receives the low res spectrogram generated in the last step and up-scales it to a higher resolution, generating a STFT spectrogram.

RTISI-LA (Real time iterative spectrum inspection with look ahead)

This algorithm transforms a STFT spectrogram back to audio waveform.

Training Process

For the training process, we used tux-100 dataset which is a dataset of 100 hours of audio in spanish. For hard drive constraints I was only able to use 35 hours of audio from the dataset which was sufficient for the task. Training using a model trained from a different language has it’s complications. That’s why we trained the model from scratch, the vocabulary wasn’t the same on this case so we had to work with the weights which was more complicated than training from scratch. After that we started seeing good results and audio was clear in Spanish we moved forward for the client custom voice cloning.

For the custom train we took video transcripts from youtube and made a dataset in LJSpeech format. Using this dataset we did transfer learning using the original model. After a very small number of epochs, the model started cloning the voice of the speaker. One thing to consider is that if the speaker dataset is limited, if you over train the model the results will get worse over time. For this reason, we checked by generating audio the best model for the task.

That’s it, that is how we trained a custom voice cloning algorithm for one of our clients. If you have a project you want to complete related to audio be free to send us a message at hello@dynamindlabs.ai. We will be more than glad to discuss it with you. We will be releasing more audio related posts here on Medium.

For now thanks for reading! 👋